We are excited to announce the four presentations scheduled for CLW 2016.

Joanne Harbluk: Cognitive Load: Assessment with Visual Behavior

Description: This presentation will introduce and discuss the problem of cognitive load during driving. Examples will be presented, along with some approaches to its assessment.

Bio: Dr. Joanne Harbluk is a Human Factors Specialist with the Human Factors and Crash Avoidance Division of Motor Vehicle Safety at Transport Canada, where she investigates human-vehicle interaction with the goal of improving safety. Her current work focuses on the human factors of driver interactions with automated vehicles and on the driver-vehicle interface to assess and mitigate distraction. She is an adjunct research professor in the Psychology Department at Carleton University in Ottawa, Canada, and an associate member of that university’s Centre for Applied Cognitive Research.

Yulan Liang: Identifying Driver Distraction using Eye Movement and Glance Data

Description: This presentation describes a series of studies on developing algorithms to identify driver cognitive distraction and visual distraction using driver eye movement and glance data.

Bio: Dr. Liang has been a Research Scientist in the Center of Behavioral Sciences at Liberty Mutual Research Institute for Safety since 2009. She obtained a Ph.D. in Human Factors from the Department of Mechanical and Industrial Engineering at the University of Iowa. Her work focuses on driver safety, performance impairment and assessment, behavior modeling, and data mining. Currently, she is investigating real-time assessment of driver performance and safety risk under various impairments, and modeling driver behavior based on naturalistic driving data.

Chun-Cheng Chang: Using Tactile Detection Response Task and Eye Glance to Assess In-Vehicle Point-of-Interest Navigation Task

Description: Point-of-Interest (POI) navigation interactions allow drivers to make simple queries like “Find nearest Korean restaurant”, and the in-vehicle information system will provide a list of restaurants that the driver can navigate to. A driving simulator study was conducted to evaluate the cognitive workload of a POI navigation task, using the Tactile Detection Response Task (TDRT) and eye glance metrics. Using multiple performance metrics together, such as response time to a vibrating stimulus (TDRT), eyes-off-road glances, and secondary task performance, can provide insight into the driver’s thought process and decision making during a POI navigation task.

Bio: Chun-Cheng Chang is a PhD Candidate under Linda Ng Boyle at the University of Washington. Chun-Cheng does extensive research on evaluating the cognitive workload of in-vehicle interfaces and is expected to complete his PhD this fall.

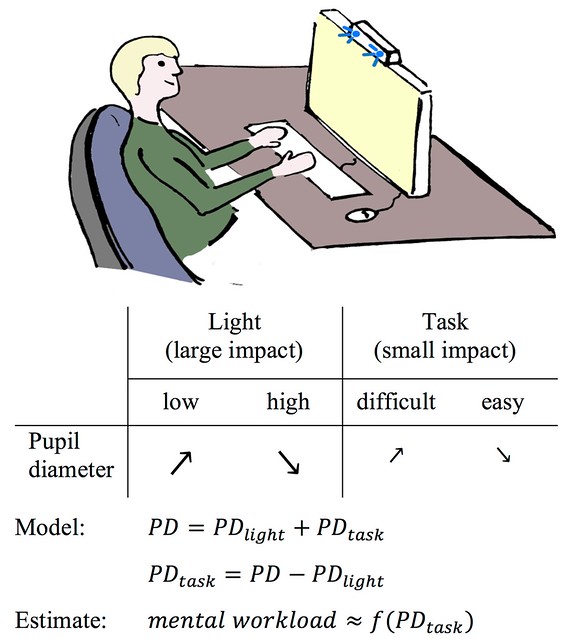

Andrew Kun: In-vehicle user interfaces: deployment, and driving simulator studies at UNH

Description: For over a decade, researchers at the University of New Hampshire have been involved in the exploration and deployment of in-vehicle user interfaces. This talk will provide a short review of our efforts on deploying in-vehicle user interfaces for police through our Project54 effort. Next, the talk will discuss a number of recent driving simulator-based studies in which eye tracking data was used to estimate the visual attention of the driver to the external world, the ability of the driver to control the vehicle, and the level of the driver’s cognitive load.

Bio: Andrew Kun is an associate professor of Electrical and Computer Engineering at the University of New Hampshire and a Faculty Fellow at the Volpe Center. Andrew’s research focuses on driving simulator-based exploration of in-car user interfaces and on methods for estimating drivers’ cognitive load in order to determine the effect of the user interface on driving performance. In this area he is interested in speech interaction, as well as the use of visual behavior and pupil diameter measures to assess and improve the design of user interfaces.